Overview

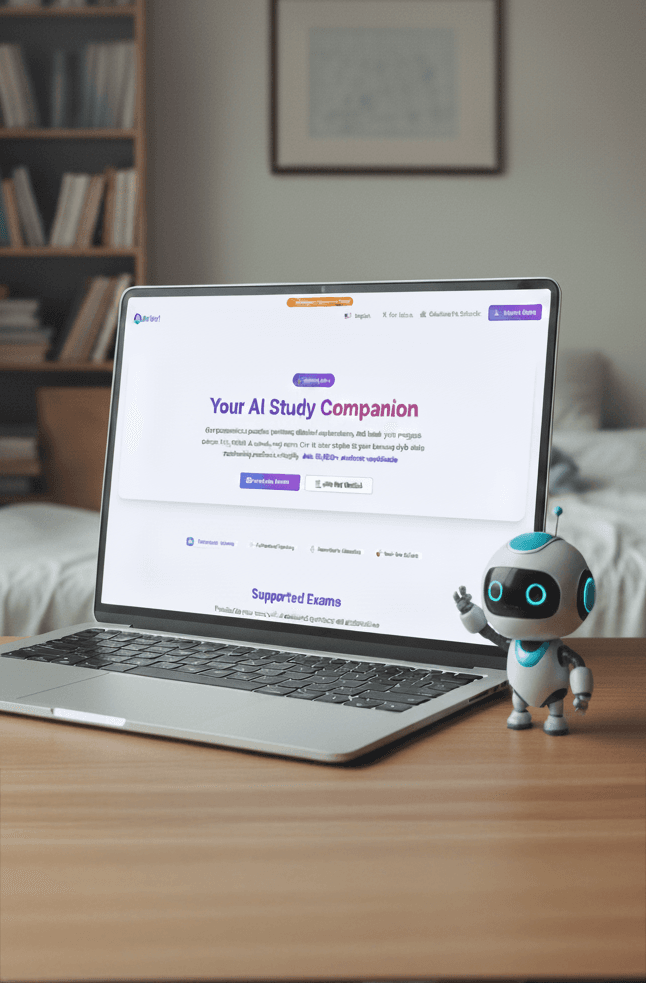

Artori is an AI-powered study platform for exam preparation. It tracks students' progress, analyzes their learning patterns, plans personalized next steps, suggests structured exam simulations, and answers questions instantly when doubts arise, eliminating the "frozen student" moment that has persisted since 1999.

Timeline: 1 month (80 hours, part-time) | Role: Solo Product Designer, Researcher, Manager & Technical Implementer | Status: Live MVP at artori.app (90% functional, awaiting full production resources)

The Problem

Students have been stuck in the same cycle for 25+ years.

From using 400-page printed study guides in 1999, through working at educational companies (2003–2025), to preparing for my Irish driving license in 2019, I saw the same pattern every time:

- Students study alone with static materials (books, PDFs, videos, and some static apps)

- When a question arises, they're frozen—no one to ask, no immediate guidance

- Search tools (Google, etc.) have been available to students for 20 years but remain too time-consuming: students waste precious study time sifting through irrelevant results to find the right explanation

- Generic AI tools (ChatGPT, etc.) are unreliable for exam prep: they hallucinate answers, provide information beyond the exam scope, and lack accountability, so students can't tell whether an answer is correct

- For some exams (e.g., driving tests), they resort to memorization rather than true understanding

- Teachers/tutors have no real-time visibility into where students struggle

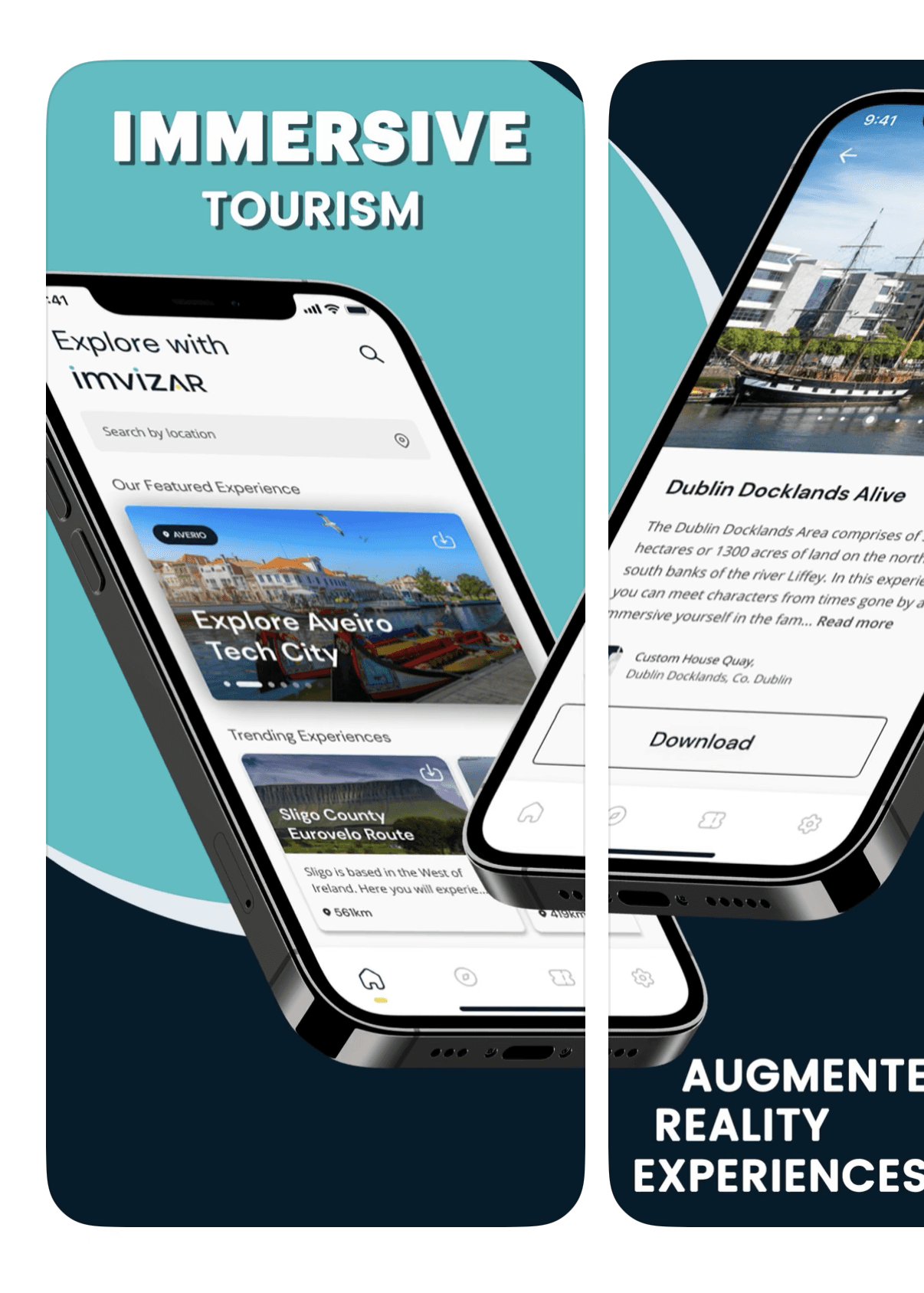

The gap: Existing platforms offer content or simulate exams, but none provide intelligent, real-time guidance that adapts to each student's unique learning journey while building genuine comprehension.

Constraints & Context

This project was built during the Maven AI Product Manager Bootcamp under significant real-world constraints:

Technical:

- First time using SnapDev (AI low-code platform) for full-stack development

- Had to research and make every architectural choice: Next.js vs vanilla JS, Python backend, MongoDB vs MySQL, Vercel hosting, AI integration without recurring fees

- Working solo meant being responsible for frontend, backend, database, authentication, admin panels, and AI implementation

Resource:

- 80 hours part-time over one month

- Self-funded (awaiting resources for production-grade AI tools and hosting)

- No dedicated development team

Learning Curve:

- First time leading a full-stack product end-to-end

- Had to understand why AI tools made certain decisions, not just accept outputs blindly

- Managed AI as a collaborator, not a magic solution

Goals & Success Criteria

User Goals:

- Students receive instant, contextual answers when stuck

- Personalized study plans based on actual performance patterns

- Build real understanding, not just memorization

- Track progress toward exam readiness

Business Goals (B2B2C Model - Schools/Institutions):

- Launch pilot in Brazil with select schools

- Validate concept with small student cohorts before scaling

- Establish marketplace model connecting students with tutors

Quantifiable Success Metrics:

- Learning Outcomes: +15% average improvement from mock-to-real exam performance

- Engagement: High weekly active usage + study plan completion rates

- Retention: Low drop-off rate indicating genuine value

- B2B Adoption: Client adoption and renewal rates

- Technical Quality: Near-zero AI hallucination through exam-grounded RAG

- Scalability: Revenue-per-student growth, NPS vs competitors, time-to-integration for new schools

My Role & Collaboration

Solo Product Designer & Builder:

- Product Strategy: Defined vision, scope, and go-to-market approach

- User Research: Conducted social listening via Perplexity AI, filtered real user pain points

- UX/UI Design: Designed all user flows, interfaces, and interactions

- Technical Implementation: Built frontend (SnapDev AI low-code + VS Code) deployed on Vercel, backend (Python, SnapDev plugin + VS Code) deployed on Render, database (MongoDB), authentication, admin dashboards

- AI Integration: Designed an AI chat system (OpenAI API) for answering student questions, which will become RAG-based in the future

- Project Management: Managed backlog, version control, and development cycles

AI Tools Used:

- ChatGPT: Organizing ideas, PRD assistance

- Perplexity AI: Social network research, solution validation

- Notion: Documentation

- SnapDev: Frontend and backend development

- VS Code + AI Agents: Custom backend + frontend logic

- OpenAI API: For chat during the mock exams

Process & Key Design Decisions

1. Research & Discovery

Method: Social listening + competitive analysis via Perplexity AI

Focus: Filtered social network conversations to identify real complaints and unmet needs, initially without formal user interviews

Key Insights:

- Students feel abandoned when stuck during self-study

- Existing platforms focus on content delivery or testing, not guided learning

- Teachers lack real-time visibility into student struggles

- Memorization is prioritized over comprehension due to lack of personalized guidance

1.1 Persona Lucas

1.2 Persona Ana

2. Ideation & Scoping Challenge

The Pivot Moment:

Mid-project, I realized the scope was far larger than anticipated. Without an Effort Matrix at the start, I didn't predict how complex the full vision would become.

Decision: Rather than delay indefinitely, I prioritized an MVP that could demonstrate core value:

- AI-powered Q&A when students are stuck (solves the "frozen" problem)

- Study tracking and progress analytics

- Exam simulations with intelligent feedback (AI Tutoring)

- Admin panels for institutional oversight

- Multi-language support

Deferred for Future Phases:

- Full tutor marketplace integration

- Advanced AI analytics dashboards

- RAG Implementation

3. Technical Architecture Decisions

Rather than accepting an AI tool's choices without question, as a product person I needed to understand every architectural decision so I could stay in control and fix issues when they arose:

Why Next.js? → Better performance and SEO for a web-based study platform

Why Python backend? → Optimal for AI integration and data processing

Why MongoDB? → Flexible schema for evolving student data models

Why Vercel? → Seamless deployment for Next.js projects

How to use AI without recurring fees? → Planned a RAG system grounded in exam content (deferred to a future phase) to minimize hallucinations and API costs

4. Designing the AI Interaction

Core Philosophy: AI suggests. Humans decide. Students learn.

Key Features Designed:

- Contextual Q&A: Students ask questions; AI provides exam-grounded answers (not generic responses)

- Study Plan Suggestions: AI analyzes performance patterns and suggests next steps

- Transparency: Students see why the AI recommends certain topics

- Human-in-the-Loop: Future tutor integration ensures AI accuracy is monitored by educators
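The study-plan and transparency features above can be illustrated with a minimal sketch. It assumes each mock-exam answer carries a topic label and a correct/incorrect flag. The `Answer` type, `suggest_topics` function, and the 80% mastery threshold are hypothetical names and values chosen for illustration, not Artori's actual implementation.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Answer:
    topic: str     # hypothetical topic label attached to each exam question
    correct: bool  # whether the student answered correctly


def suggest_topics(answers, max_topics=3, mastery_threshold=0.8):
    """Rank the weakest topics and attach a human-readable reason to each."""
    totals = defaultdict(int)
    right = defaultdict(int)
    for a in answers:
        totals[a.topic] += 1
        if a.correct:
            right[a.topic] += 1

    suggestions = []
    for topic, total in totals.items():
        accuracy = right[topic] / total
        if accuracy < mastery_threshold:
            # The reason string is what the student would see next to the suggestion.
            reason = f"{right[topic]} of {total} answers correct ({accuracy:.0%})"
            suggestions.append({"topic": topic, "accuracy": accuracy, "reason": reason})

    # Weakest topics first; ties broken alphabetically so the order is stable.
    suggestions.sort(key=lambda s: (s["accuracy"], s["topic"]))
    return suggestions[:max_topics]


history = [
    Answer("road signs", True), Answer("road signs", True),
    Answer("right of way", False), Answer("right of way", True),
    Answer("speed limits", False), Answer("speed limits", False),
]
plan = suggest_topics(history)
for item in plan:
    print(f"Review {item['topic']}: {item['reason']}")
```

Returning the reason alongside each suggestion keeps the recommendation explainable: the student sees the evidence behind a topic, not just the topic.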

Managing AI Limitations:

- Designed error states for when AI doesn't have sufficient data

- Planned: implement RAG (Retrieval-Augmented Generation) to ground answers in verified exam content

- Planned: build feedback loops for students and tutors to flag incorrect responses
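As a rough illustration of how grounding and the "insufficient data" error state could fit together, the sketch below retrieves a passage before any model call and returns an explicit error state when nothing relevant is found. It uses simple keyword overlap as a stand-in for real retrieval (a production RAG system would more likely use embeddings). The passages are placeholders, and `retrieve`, `build_prompt`, and the `min_overlap` threshold are hypothetical.

```python
import re

# Placeholder passages for illustration only, not real exam content.
PASSAGES = [
    "A solid white line in the centre of the road means you must not cross it to overtake.",
    "The speed limit in built-up areas is 50 km/h unless signs show otherwise.",
]

# Common words ignored when matching, so they cannot cause false matches.
STOPWORDS = {"a", "an", "the", "is", "in", "of", "to", "it", "what", "how", "do", "i", "you", "my"}


def tokenize(text):
    """Lowercase the text and return its set of non-stopword tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(question, passages, min_overlap=2):
    """Return the passage sharing the most words with the question, or None."""
    q = tokenize(question)
    best, best_score = None, 0
    for p in passages:
        score = len(q & tokenize(p))
        if score > best_score:
            best, best_score = p, score
    return best if best_score >= min_overlap else None


def build_prompt(question, passages=PASSAGES):
    """Build a grounded prompt, or an explicit error state if nothing relevant exists."""
    context = retrieve(question, passages)
    if context is None:
        return {
            "status": "insufficient_data",
            "message": "No verified exam material covers this yet. You can flag it for a tutor.",
        }
    prompt = (
        "Answer using ONLY the exam material below. "
        "If it does not cover the question, say so.\n\n"
        f"Exam material: {context}\n\nQuestion: {question}"
    )
    return {"status": "ok", "prompt": prompt, "source": context}


ok = build_prompt("What is the speed limit in built-up areas?")
missing = build_prompt("How do I renew my passport?")
print(ok["status"], "|", missing["status"])
```

Keeping the `source` passage in the result lets the interface show students where an answer came from, which also gives tutors something concrete to check when a response is flagged.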

5. Crisis & Recovery: The Version Control Disaster

What Happened:

Working solo without a formal backlog, I got swept up in new ideas. One week, I forgot to push to Git. A terminal command accidentally erased all files.

How I Recovered:

After hours of panic, I remembered Mac Time Machine was active and restored the project.

Critical Learnings Implemented:

- Created a formal backlog (even for solo work)

- Committed to Git after every completed task

- Established daily version control discipline
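One way to back this discipline with a quick check is a small script that reports uncommitted files and unpushed commits. The sketch below is an illustration, not part of the project: `parse_status` and `check_repo` are hypothetical helpers that read the output of `git status --porcelain=v1 --branch`, whose first line has the form `## main...origin/main [ahead 2]` when a branch tracks a remote.

```python
import re
import subprocess


def parse_status(porcelain_output):
    """Summarize the output of `git status --porcelain=v1 --branch`."""
    lines = porcelain_output.splitlines()
    header = lines[0] if lines and lines[0].startswith("## ") else ""
    # Every non-header line describes one changed or untracked path.
    changed = [line for line in lines if line and not line.startswith("## ")]
    ahead = re.search(r"\[ahead (\d+)", header)
    return {
        "uncommitted_files": len(changed),
        "unpushed_commits": int(ahead.group(1)) if ahead else 0,
        "has_upstream": "..." in header,
    }


def check_repo(path="."):
    """Run git in `path` and return the parsed summary (requires git on PATH)."""
    result = subprocess.run(
        ["git", "status", "--porcelain=v1", "--branch"],
        cwd=path, capture_output=True, text=True, check=True,
    )
    return parse_status(result.stdout)


sample = "## main...origin/main [ahead 2]\n M app.py\n?? notes.txt\n"
summary = parse_status(sample)
print(summary)
```

Calling `check_repo()` at the end of a work session gives a one-line answer to "is everything committed and pushed?"; a branch with no upstream shows `has_upstream` as false, which is itself a warning that nothing has been backed up remotely.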

The Solution

Artori is a web-based AI study companion that provides:

- Intelligent Study Tracking: Monitors student progress, identifies weak areas, and adapts study plans in real time

- AI-Powered Q&A: Instant, contextual answers when students encounter questions—eliminating the "frozen" moment

- Structured Exam Simulations: Practice tests that adapt to student performance with detailed feedback

- Institutional Dashboards: Schools and tutors gain visibility into student progress and intervention points

- Future Marketplace: Connects students with tutors who monitor AI accuracy and provide human oversight

Screens

Student Dashboard

Setting up a Mock Exam

Mock Exam controls

AI Question Explanation

Presenting the possibility of calling the AI-Tutor

Admin Dashboard

Managing the Exams

Managing the Questions

Tutor Dashboard

Current Status (MVP Complete):

- Frontend: Live and functional, deployed on Vercel for free

- Backend: Python-based, deployed on Render for free

- Authentication: Login/signup operational

- Admin Panels: Institutional oversight and exam management tools ready

- AI Chat: Q&A system functional using OpenAI API

- Awaiting: Production-grade AI API plan, RAG system and professional hosting for full public launch

Impact & Validation Plan

Pilot Launch Strategy (Brazil):

- Partner with select schools for controlled beta testing

- Small student cohorts (20-50 students per school)

- Gather qualitative feedback and quantitative performance data

- Iterate based on real-world usage before scaling

Expected KPIs (Post-Pilot):

- +15% improvement in mock-to-real exam scores

- 70%+ weekly active usage among enrolled students

- <20% drop-off rate within first month

- 80%+ institutional renewal rate after pilot

- Near-zero AI hallucination through exam-grounded RAG

- NPS 50+ vs competitor platforms
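To make these targets measurable, the sketch below shows one way the pilot metrics could be computed. It rests on assumptions: the +15% target is read as a relative improvement per student (the plan does not say whether it means relative change or percentage points), NPS uses the standard definition (share of ratings 9-10 minus share of ratings 0-6), and all function names and sample numbers are hypothetical.

```python
def score_improvement(mock_scores, real_scores):
    """Average relative improvement from mock to real exam, in percent."""
    gains = [(real - mock) / mock * 100 for mock, real in zip(mock_scores, real_scores)]
    return sum(gains) / len(gains)


def rate(part, whole):
    """Share of `whole` represented by `part`, in percent."""
    return part / whole * 100


def nps(ratings):
    """Net Promoter Score from 0-10 ratings: % promoters minus % detractors."""
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return (promoters - detractors) / len(ratings) * 100


def meets_targets(metrics):
    """Compare measured metrics against the pilot targets listed above."""
    return {
        "improvement": metrics["improvement"] >= 15,
        "weekly_active": metrics["weekly_active"] >= 70,
        "drop_off": metrics["drop_off"] < 20,
        "nps": metrics["nps"] >= 50,
    }


# Made-up sample data for a two-student, five-rating example.
metrics = {
    "improvement": score_improvement([60, 50], [69, 60]),
    "weekly_active": rate(35, 50),  # 35 of 50 enrolled students active this week
    "drop_off": rate(12, 50),       # 12 of 50 students stopped within the first month
    "nps": nps([10, 9, 8, 6, 10]),
}
print(meets_targets(metrics))
```

Fixing these definitions before the pilot starts avoids arguing afterwards about what "15% improvement" or "drop-off" was supposed to mean.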

Key Learnings

1. Scope Management is Critical—Even Solo

Without an Effort Matrix at the start, I underestimated complexity. Next time: prioritize ruthlessly from Day 1, ship smaller but functional first.

2. Process Discipline Matters for Solo Projects

I learned the hard way that version control and backlog management aren't just for teams—they're survival tools for solo builders. Now: commit after every task, maintain a backlog religiously.

3. AI is a Collaborator, Not Magic

Building with AI low-code tools taught me that understanding why decisions are made is essential. You must manage AI outputs, not blindly trust them, because you'll be the one fixing issues when they arise.

4. Real Validation Requires Real Users

No amount of research replaces putting the product in students' hands. The pilot phase isn't just a formality—it's where assumptions get tested and the product evolves into something genuinely valuable.

5. Building Full-Stack Changed My Design Perspective

Understanding technical constraints firsthand made me a better product designer. I now design with implementation realities in mind, balancing ambition with feasibility.

Live Project

🔗 artori.app (MVP complete, awaiting production resources)

This case study demonstrates product thinking across research, design, technical implementation, and strategic planning—showcasing the ability to build end-to-end AI-powered solutions under real-world constraints.